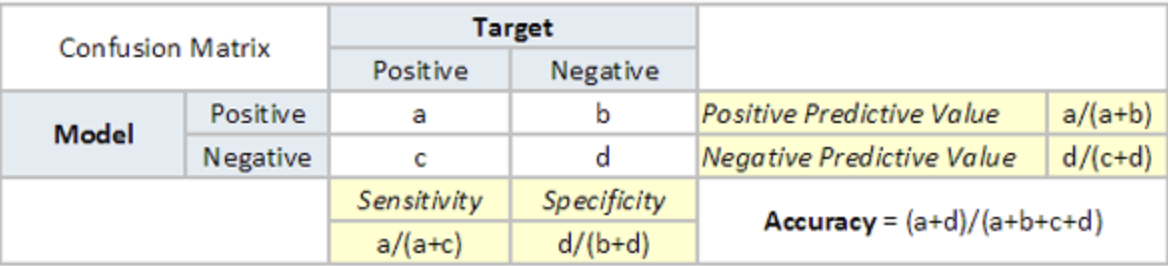

- Confusion matrix - A confusion matrix is an $N \times N$ matrix, where $N$ is the number of classes being predicted. Its cells are the counts from which precision, recall, specificity, and accuracy are computed, and it also underlies the AUC-ROC curve.

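Type 1 Error = false positive (FP): the model predicts the positive class when the actual class is negative.

Type 2 Error = false negative (FN): the model predicts the negative class when the actual class is positive.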

- Accuracy - The fraction of correct predictions out of the total number of predictions made by the model.

- Precision - The ratio of correctly predicted positive observations to the total predicted positives. It is useful when the cost of false positives is high.

- Recall (Sensitivity or True Positive Rate) - The fraction of actual positive instances that the model correctly predicts as positive. It is useful when the cost of false negatives is high.

- F1 score - The harmonic mean of precision and recall, giving a single metric that balances the two. It is often used when both precision and recall are important.

- ROC - AUC (Receiver Operating Characteristic - Area Under Curve) - The ROC curve plots the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings. The AUC is the probability that the model ranks a random positive instance higher than a random negative instance.
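The ranking interpretation of AUC can be checked directly on a small example. The sketch below is a minimal illustration, not a library implementation: the function name `auc_by_ranking` and the toy labels and scores are made up for this example, and it assumes binary labels where 1 is the positive class. Counting a tie as half a win is a common convention, adopted here as an assumption.

```python
from itertools import product


def auc_by_ranking(y_true, scores):
    """Estimate AUC as the fraction of (positive, negative) pairs
    in which the positive instance gets the higher score.
    Ties count as half a correct ranking (an assumed convention)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative instance")

    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy data: 3 positives, 2 negatives.
y_true = [1, 0, 1, 0, 1]
scores = [0.9, 0.4, 0.35, 0.2, 0.8]

auc = auc_by_ranking(y_true, scores)
print(f"AUC = {auc:.4f}")  # 5 of the 6 pairs are ranked correctly
```

This pairwise loop is quadratic in the number of instances, so it is meant for building intuition rather than for large datasets.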

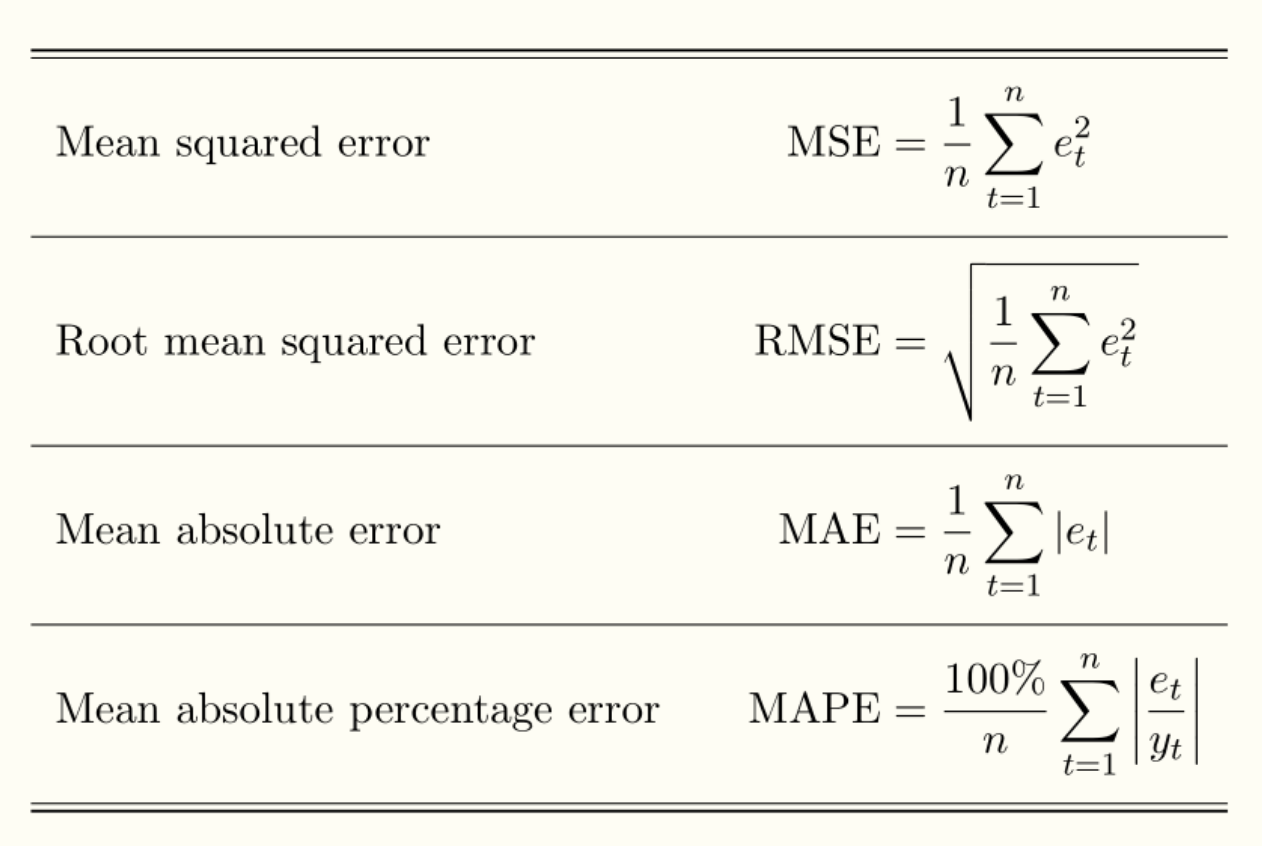

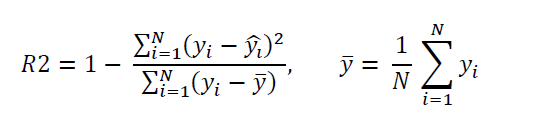
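The classification metrics described above all come from the four cells of a binary confusion matrix. The following is a rough sketch under stated assumptions: labels are 0/1 with 1 as the positive class, the function names and toy data are hypothetical, and returning 0.0 when a denominator is zero is a choice made here for simplicity, not a universal rule.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, fn, tn) for binary labels, with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # Type 1 errors
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # Type 2 errors
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def safe_div(numerator, denominator):
    """Divide, returning 0.0 for a zero denominator (an assumed convention)."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "false_positive_rate": safe_div(fp, fp + tn),
        "false_negative_rate": safe_div(fn, fn + tp),
    }


# Hypothetical toy data: 4 actual positives, 6 actual negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

for name, value in classification_metrics(y_true, y_pred).items():
    print(f"{name}: {value:.3f}")
```

On this toy data there is one Type 1 error and one Type 2 error, so precision and recall are both 3/4 while accuracy is 8/10.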

- Regression metrics